Building the Shepherd.com Web Engine

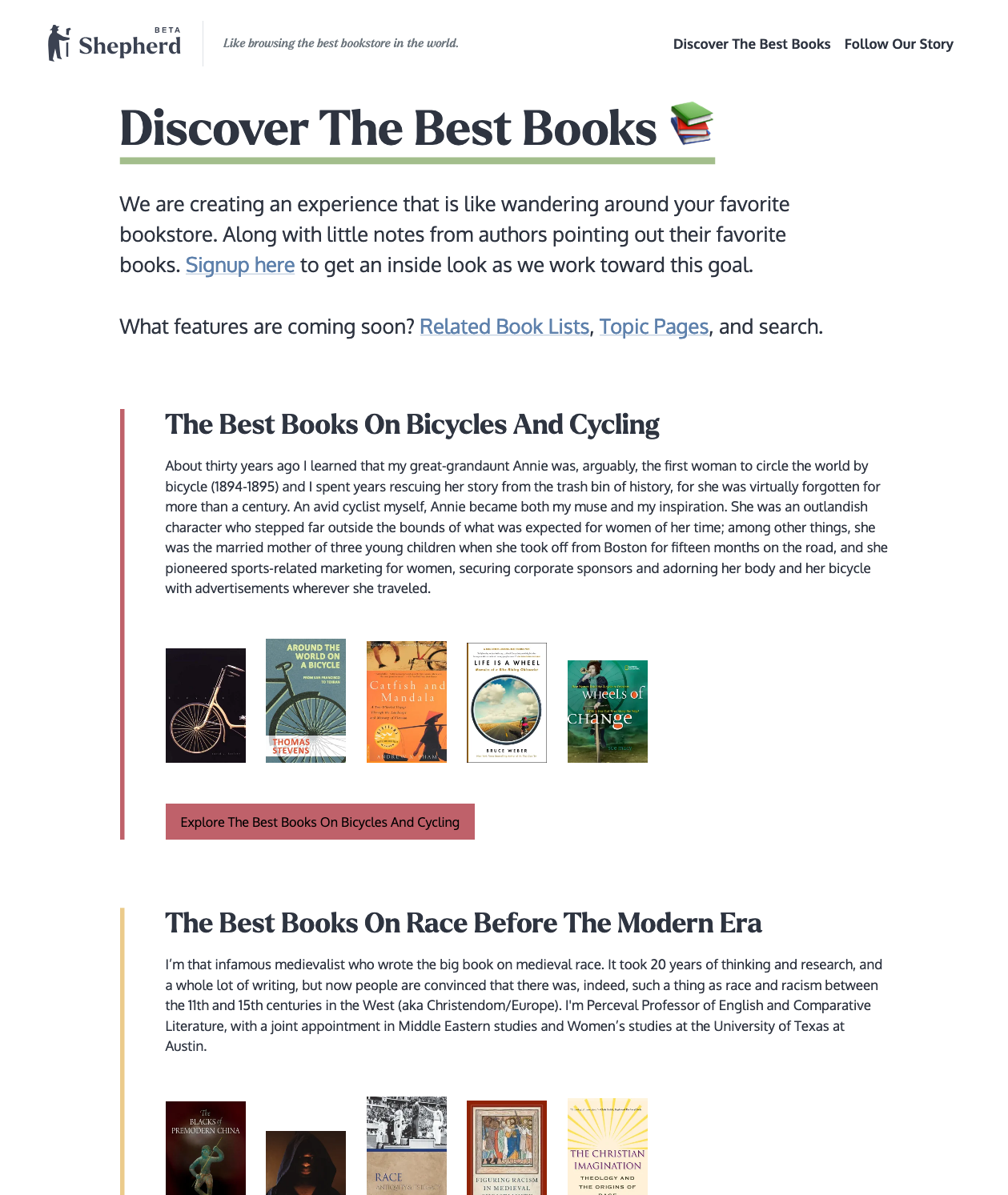

Some time in late 2020 I got approached by Ben Fox, founder and entrepreneur, who needed help building his new venture Shepherd.com - “A better way to discover amazing books”. It is a website of curated book recommendations by authors and famous people organized around topics.

As a book lover, I quickly got excited and agreed to build the website and a content management system (CMS) behind it. The website launched publicly today (20 April 2021), and following is the story of building the software that powers it. Ben runs a diary and newsletter from the founder’s perspective and if you happen to be a book author interested in promoting a book or project, get in touch here.

How it started?

When Ben reached out to me, he already had a clear picture on what he wants and had elaborate design wireframes. The initial requirements were written down in Google Docs which allowed us to quickly come up with a roadmap with estimated costs.

The first plan was quite simple:

- Build a headless CMS so that creating website content can be started as soon as possible.

- Build a “minimum viable product” (MVP) website and launch as soon as possible.

- Elaborate advanced website and CMS features after launch.

Research on book metadata and cover image APIs

At the beginning it seemed like we will want to have an external data source on book metadata and high quality book covers. Even though various options exist, this is a surprisingly hard problem to solve for several reasons:

- The data quality wildly varies especially for non-English publications or niche topics.

- Book cover quality varies even more, it’s hard to cater for modern designs with high resolution images.

- Quality sources can get really expensive.

- Licensing and usage rights are usually restrictive and complicated.

Based on Ben’s original shortlist, here is a brief summary of the options we looked at:

- Amazon: you need to be an Amazon Associate Program member to use their APIs, which was not an option at this point.

- Google Books: easy to use REST API, great search (surprise!), low quality covers only. Free but restricted.

- Ingram: awful SOAP API (documentation is 56 pages), high quality metadata with high(ish) resolution covers. Expensive and restrictive.

- ISBNdb: simple REST API but the data quality is not impressive. Cheap, restrictive.

- OpenLibrary: simple REST API. Free and anyone can contribute data, it’s the Wikipedia of book databases (or the Wild West of it…)

- Bowker a not too bad XML REST API, some books have high resolution covers. Not free.

In the end we decided not to use any of these APIs and rely on manual book data entry until later when it becomes clearer what the business demands.

Project management and collaboration

Since it is a two-men team with Ben and me for most of the time, we kept tooling lightweight. Ben writes down the initial requirements for each milestone or major group of features in Google Docs, where we use comments and collaborative editing until clarified and agreed on everything.

We initially used checklists within Google Docs for keeping track of tasks and progress but that quite soon turned out to be not flexible enough due to lack of useable long conversation threads. We now keep track of everything in GitHub Issues which perfectly does the job. I briefly also considered using GitHub project boards but found it a little awkward to use and probably an overkill for this team size. We do use issue milestones though to organize and schedule stories, bugs and other tasks into larger buckets.

Our day-to-day communication is also very lightweight. We do conversations on GitHub Issues when there is one and exchange emails regularly on other topics. We have not seen the need for any sort of real-time, synchronous collaboration so far. I also find the GitHub notification inbox very useful for keeping up with conversations happening on issues.

Software stack

I used the following inputs to decide on what software stack to use:

- Ben wanted to get to a working CMS as quickly as possible.

- There were no particular user interface or visual design requirements for the CMS part and compromises were acceptable if they speed up delivery.

- The CMS was to be used by Ben a very few selected administrators.

- There was no user generated content for the immediate roadmap.

- The public website consists of mostly static pages with minimal to no interactions other than following links.

- High quality books covers were essential parts of the content.

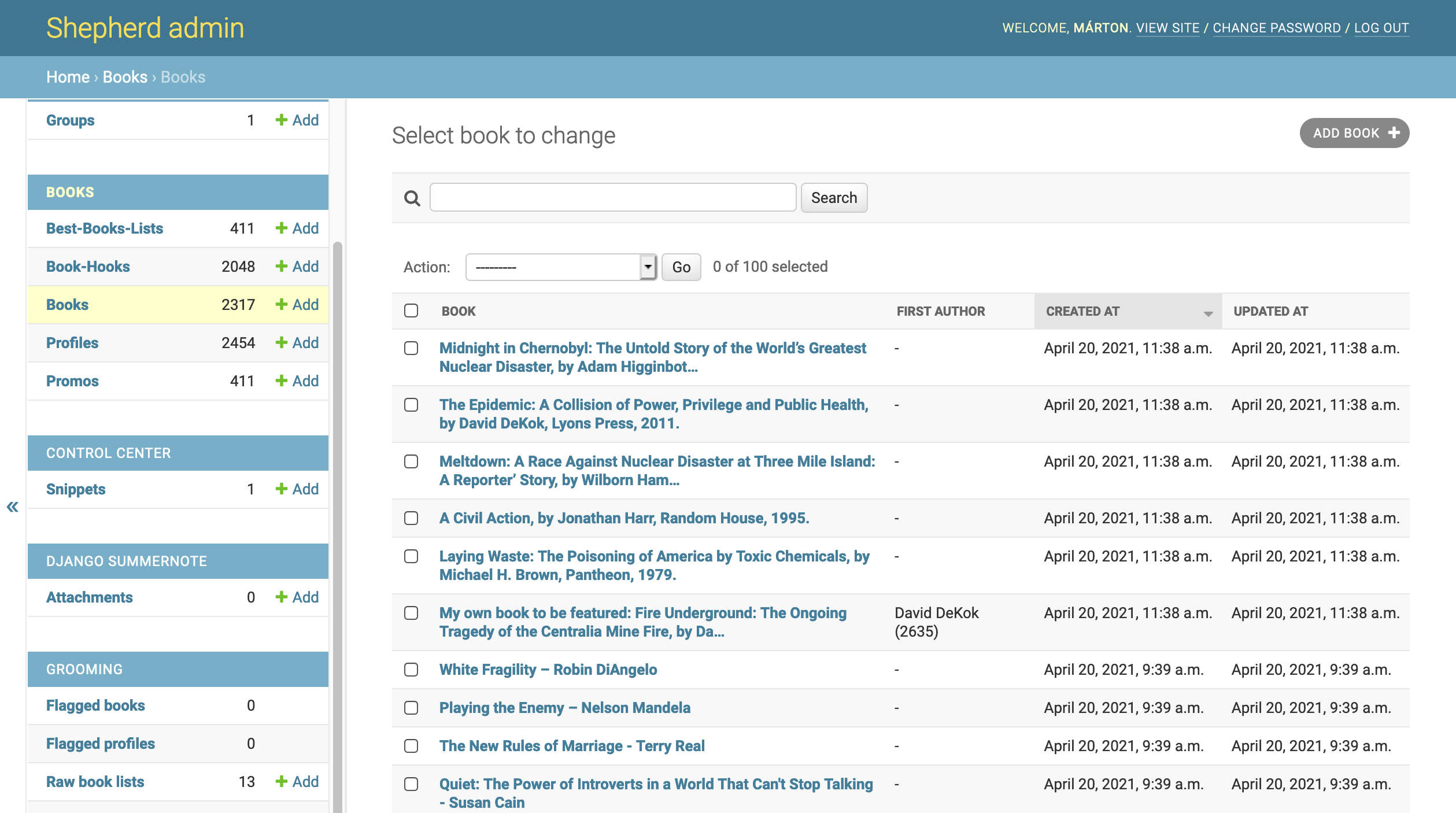

Given these criteria I decided for the Django web framework:

- It is a “batteries included” framework that allows focusing on building features at light speed.

- It is mature. Having existed for 15 years, I know I won’t run into major issues or lack of documentation and all my possible questions have already been answered on StackOverflow.

- Django comes with an automatically generated admin interface which works very well for basic CMS purposes.

- It assumes a relational database for which it also automatically generates and manages schema migrations allowing to offload most of the heavy-lifting part of working with data to the database server.

- Given the mostly static nature of the public facing website, scaling Django is not a major challenge.

So far no regrets for choosing Django and using the built-in admin for managing the content. There are definitely a few places where I stretched how far one can go customizing the admin and had to go deeper in Django’s source code than I wished for but this has been more an exception than something common so far.

Infrastructure

Given that the system was to be built by me in a solo fashion (collaborating with Ben on the requirements and content building side and a designer responsible for the look of the public website) spending time on anything that’s not closely related to the main goal was out of question. One such area where time and money can saved is setting up and managing infrastructure, also known as DevOps.

For this reason I decided to deploy the application to Heroku. It is not considered to be the “hottest thing” nowadays but it does one thing well: it runs applications with little to no setup or maintenance. The price can go up steeply if your capacity needs grow but that’s a good problem to have. As Heroku did not require me to write my application in any vendor-specific way (except for following the twelve factor principles which is useful on most competing platforms) it’s easy to move elsewhere once the extra effort is financially justified.

Heroku also offers managed PostgreSQL which allows me to not care about database security and backups. It even comes with a 4 days window of point-in-time rollback which drastically improves my quality of sleep.

Uploading, storing, resizing and serving images for book covers and author portraits is an important part of the software but not something worth building ourselves. Heroku does not offer any sort of static hosting itself but they have partnered with Cloudinary which is a CDN and a media management service in one. Setting up Cloudinary with Django integration took me about 30 minutes and we have working uploads and images resized to all responsible design needs.

We use Cloudflare as a CDN, caching and security layer in front of the Django application. Because Heroku offers no static file hosting and Django also recommends solving the problem externally, Cloudflare CDN came handy for serving stylesheets, fonts, images and other static assets. Another selling point for Cloudflare was their cache which we can use for saving a huge amount of Heroku resources by serving a cached version of infrequently changing but still dynamically generated pages.

I am positive that this infrastructure will serve the project for long enough after the public launch so that we can identify growth patterns and make a plan to evolving it (or not) while also building the next round of features.

Continuous delivery

I strongly believe in continuous delivery from day zero of any project. We have a decent amount of automated tests mostly of integration and unit kind, which are ridiculously fast in Python/Django. Most test exercise the whole stack from the HTTP middleware all the way down to an in-memory SQLite database and the entire test suite still completes within a few seconds.

Code quality checks (tests and Pylint rules) alongside of verifying code formatting conformity with Black is done on each commit using GitHub Actions. If the checks succeed, the code is automatically deployed to Heroku.

We do have a staging environment as a separate Heroku app with dedicated database. Just like the production environment, it is also automatically deployed on each commit. We use staging for making sure database migrations apply without issues, to play around with content without messing up the public website and sometimes for previewing features.

Alternatives considered

There are a few alternatives we have or could have considered:

- WordPress: we could have taken WordPress off the shelf at one of the many providers but keeping the data structure and workflow heavily customized to our needs was a must from day one which is not something WordPress easily allows.

- Django CMS: might be a feasible option for the future but at the beginning a heavyweight CMS did not seem justified.

- Ruby on Rails: a reasonable alternative but I’ve been doing more Python work than Ruby recently.

- Laravel: if I had any experience with Laravel, it could have also been an option, but I have none.

There are probably plenty of others worth considering, but these were the ones that briefly crossed my mind at the beginning.

The future

There is a long list of features we will keep shipping in the near future. Time will tell how well the infrastructure holds under the load but I don’t expect surprises here. Once we start adding more dynamic or user generated content that does not play nice with heavy caching we might need to reconsider some parts and even move to a cheaper hosting provider from Heroku once we can justify the extra DevOps cost.

If everything goes well, I will write a follow-up post here. (Maybe also when things go bad, but that “should not happen”;)

Have comments or questions? Let’s discuss on Hacker News!